cognesy / instructor-php

Structured data extraction in PHP, powered by LLMs

Installs: 100 993

Dependents: 1

Suggesters: 0

Security: 0

Stars: 308

Watchers: 7

Forks: 23

Open Issues: 3

pkg:composer/cognesy/instructor-php

Requires

- php: ^8.3|^8.4|^8.5

- ext-ctype: *

- adbario/php-dot-notation: ^3.3

- aimeos/map: ^3.8

- gioni06/gpt3-tokenizer: ^1.2

- phpdocumentor/reflection-docblock: ^5.6

- psr/event-dispatcher: ^1.0

- psr/http-client: ^1.0

- psr/http-message: ^2.0

- psr/log: ^3.0

- symfony/console: ^7.1

- symfony/filesystem: ^7.1

- symfony/property-access: ^7.0

- symfony/property-info: ^7.0

- symfony/serializer: ^7.0

- symfony/validator: ^7.0

- symfony/var-dumper: ^7.0

- symfony/yaml: ^7.0

- vlucas/phpdotenv: ^5.6

Requires (Dev)

- ext-curl: *

- ext-dom: *

- ext-fileinfo: *

- ext-libxml: *

- ext-simplexml: *

- ext-xmlreader: *

- cebe/markdown: ^1.2

- eftec/bladeone: ^4.16

- guzzlehttp/guzzle: ^7.0

- guzzlehttp/psr7: ^2.0

- icanhazstring/composer-unused: ^0.9.0

- illuminate/database: ^11.47

- illuminate/http: ^11.0

- illuminate/support: ^11.47

- jetbrains/phpstorm-attributes: ^1.2

- league/commonmark: ^2.7

- league/html-to-markdown: ^5.1

- maglnet/composer-require-checker: ^4.16

- mockery/mockery: ^1.6

- monolog/monolog: ^3.0

- nikic/iter: ^2.4

- pestphp/pest: ^4.0

- phpbench/phpbench: ^1.4

- phpstan/phpstan: ^1.11

- phpstan/phpstan-strict-rules: ^1.6

- psr/http-factory-implementation: *

- shipmonk/dead-code-detector: ^0.5.1

- spatie/browsershot: ^5.1

- symfony/css-selector: ^7.0

- symfony/dom-crawler: ^7.0

- symfony/http-client: ^7.0

- toolkit/cli-utils: ^2.0

- twig/twig: ^3.0

- vimeo/psalm: ^6.0

Suggests

- ext-dom: Used by Cognesy\Auxiliary\Web\Html for HTML parsing

- ext-fileinfo: Used by Cognesy\Addon\Image\Image for MIME information extraction

- ext-libxml: Used by Cognesy\Utils\Template\Template for prompt template support

- ext-simplexml: Used by Cognesy\Utils\Template\Template for prompt template support

- ext-xmlreader: Used by Cognesy\Utils\Template\Template for prompt template support

- cognesy/instructor-laravel: For Laravel facades, testing fakes, and framework integration

- eftec/bladeone: For BladeOne template engine support

- guzzlehttp/guzzle: For Guzzle HTTP client support

- guzzlehttp/psr7: For Guzzle PSR-7 support

- spatie/browsershot: Used by Cognesy\Auxiliary\Web for Browsershot web crawler support

- symfony/http-client: For Symfony HTTP client support

- symfony/http-client-contracts: For Symfony HTTP client support

- twig/twig: For Twig template engine support


This package is auto-updated.

Last update: 2026-02-23 01:31:59 UTC

README

This monorepo contains a set of dev-friendly, framework-agnostic components offering three main capabilities:

- Instructor for PHP - structured data extraction in PHP, powered by LLMs, designed for simplicity, transparency, and control; supports custom LLM output processors (not just JSON);

- Polyglot for PHP - unified LLM API: write code once, deploy with any LLM provider (OpenAI chat completions API, OpenAI responses API, Anthropic, Gemini, Ollama, etc.); you can also write your own LLM drivers;

- Agent SDK for PHP - lightweight but powerful agent SDK supporting custom tools, lifecycle hooks, subagents, context management, custom stop / continuation criteria, observability via events, packaged capabilities, agent templates, session management, and more.

Read More

- Official website https://instructorphp.com

- Docs website (Mintlify) https://docs.instructorphp.com

- Docs (GitHub Pages) https://cognesy.github.io/instructor-php/

What is Instructor?

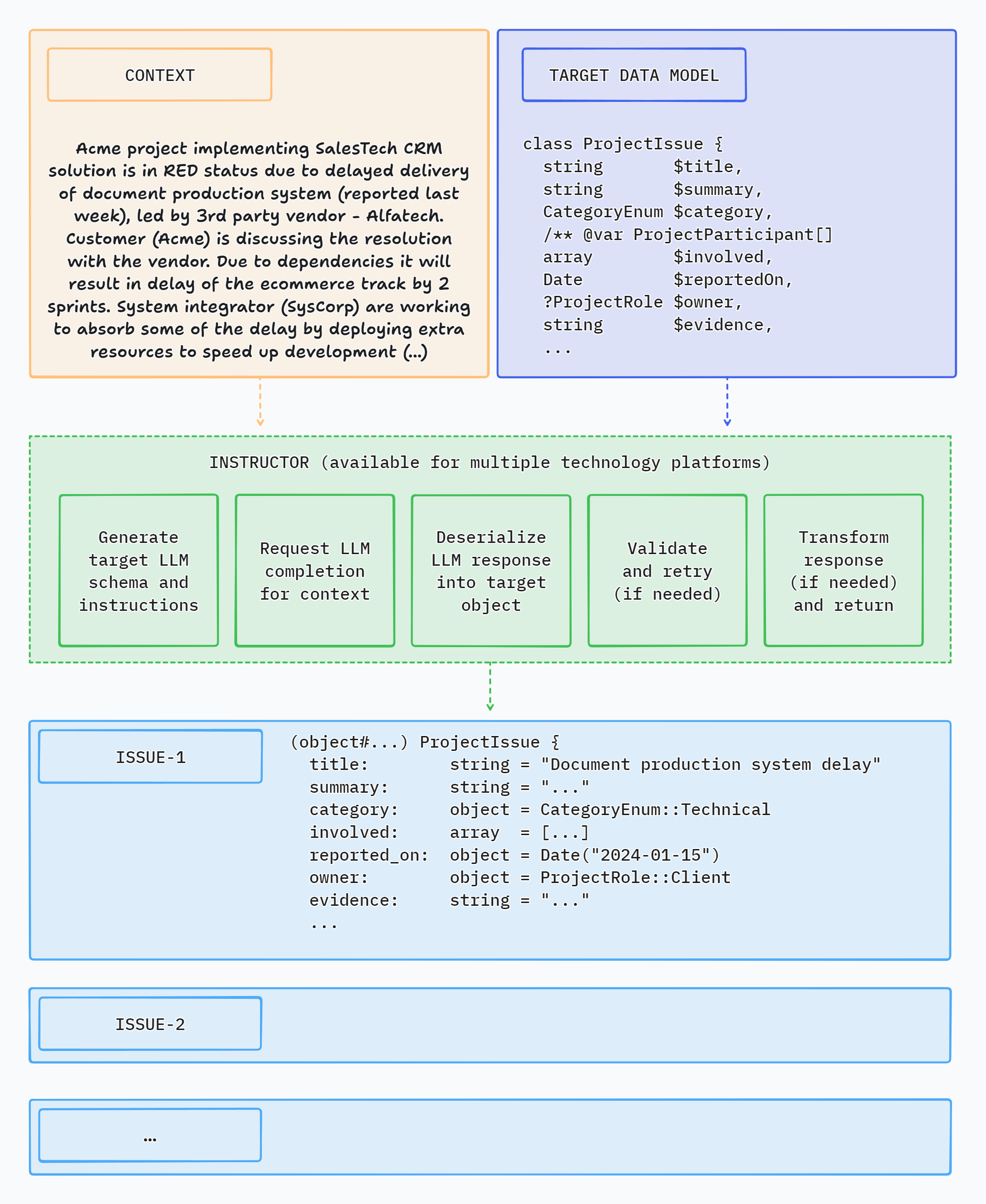

Instructor is a library that allows you to extract structured, validated data from multiple types of inputs: text, images, or OpenAI-style chat sequence arrays. It is powered by Large Language Models (LLMs).

Instructor simplifies LLM integration in PHP projects. It handles the complexity of extracting structured data from LLM outputs, so you can focus on building your application logic and iterate faster.

Instructor for PHP is inspired by the Instructor library for Python created by Jason Liu.

Here's a simple CLI demo app using Instructor to extract structured data from text:

How Instructor Enhances Your Workflow

Instructor introduces three key enhancements compared to direct API usage.

Response Model

Specify a PHP class to extract data into via the 'magic' of LLM chat completion. And that's it.

Instructor reduces the brittleness of code that extracts information from text by leveraging structured LLM responses.

Instructor helps you write simpler, easier-to-understand code: you no longer have to write lengthy function call definitions or code that assigns the returned JSON to target data objects.
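For a sense of the assignment code Instructor spares you, here is a minimal standalone sketch that copies a decoded JSON object into the typed public properties of a target class. It is an illustration only, not Instructor's deserializer (the package lists symfony/serializer among its dependencies); the `hydrate()` helper is hypothetical.

```php
<?php

// Target data structure: a plain PHP class with typed properties.
class Person
{
    public string $name;
    public int $age;
}

/**
 * Hypothetical helper (not part of Instructor): copy keys from a decoded
 * JSON object into the matching public properties of a new $class instance.
 */
function hydrate(string $class, string $json): object
{
    $data = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    $object = new $class();
    foreach ((new ReflectionClass($class))->getProperties(ReflectionProperty::IS_PUBLIC) as $property) {
        $name = $property->getName();
        if (array_key_exists($name, $data)) {
            // Assignment goes through PHP's checks for typed properties.
            $object->$name = $data[$name];
        }
    }
    return $object;
}

// JSON as an LLM might return it for "His name is Jason and he is 28 years old."
$person = hydrate(Person::class, '{"name": "Jason", "age": 28}');
echo $person->name, ' ', $person->age, PHP_EOL; // Jason 28
```

PHP's typed properties still guard the assignment in this sketch: a value of the wrong type is either coerced (in non-strict mode) or rejected with a `TypeError`.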

Validation

The response model generated by the LLM can be automatically validated against a set of rules. Currently, Instructor supports only Symfony validation.

You can also provide a context object to use enhanced validator capabilities.

Max Retries

You can set the number of retry attempts for requests.

Instructor repeats the request in case of a validation or deserialization error, up to the specified number of times, trying to get a valid response from the LLM.
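Conceptually, the retry mechanism is a loop that feeds validation errors back to the model. The sketch below is a simplified, self-contained model of that idea rather than Instructor's implementation: `$fakeLlm` is a hypothetical stub standing in for a real LLM call, and `validatePerson()` hand-codes the checks that Symfony constraints would express. In this sketch `$maxRetries` counts extra attempts after the first one.

```php
<?php

/** Hypothetical validator: returns a list of error messages (empty when valid). */
function validatePerson(array $data): array
{
    $errors = [];
    if (!is_string($data['name'] ?? null) || strlen($data['name']) < 3) {
        $errors[] = 'name: must be a string of at least 3 characters';
    }
    if (!is_int($data['age'] ?? null) || $data['age'] < 0) {
        $errors[] = 'age: must be a non-negative integer';
    }
    return $errors;
}

/**
 * Ask $llm for data and validate it; on failure, append the errors to the
 * conversation and try again, up to $maxRetries extra attempts.
 */
function extractWithRetries(callable $llm, array $messages, int $maxRetries): array
{
    for ($attempt = 0; $attempt <= $maxRetries; $attempt++) {
        $data = $llm($messages);
        $errors = validatePerson($data);
        if ($errors === []) {
            return $data;
        }
        // Feed the errors back so the model can self-correct on the next attempt.
        $messages[] = ['role' => 'assistant', 'content' => json_encode($data)];
        $messages[] = ['role' => 'user', 'content' => "Fix these errors:\n" . implode("\n", $errors)];
    }
    throw new RuntimeException("Validation failed after {$maxRetries} retries");
}

// Hypothetical LLM stub: wrong on the first call, corrected once it has seen feedback.
$fakeLlm = function (array $messages): array {
    return count($messages) === 1
        ? ['name' => 'JX', 'age' => -28]
        : ['name' => 'Jason', 'age' => 28];
};

$result = extractWithRetries(
    $fakeLlm,
    [['role' => 'user', 'content' => 'His name is JX, aka Jason, he is -28 years old.']],
    3,
);
echo $result['name'], ' ', $result['age'], PHP_EOL; // Jason 28
```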

Support for LLM Providers

Instructor offers out-of-the-box support for the following LLM providers:

- AI21 / Jamba

- Anthropic

- Azure OpenAI

- Cerebras

- Cohere (v2 OpenAI compatible)

- Deepseek

- Fireworks

- Google Gemini (native and OpenAI compatible)

- Groq

- HuggingFace

- Inception

- Minimaxi

- Mistral

- Moonshot / Kimi

- Ollama (on localhost)

- OpenAI

- OpenRouter

- Perplexity

- Sambanova

- xAI / Grok

For usage examples, check the Hub section or the examples directory in the code repository.

Usage

Installation

You can install Instructor via Composer:

composer require cognesy/instructor-php

Basic Example

This is a simple example demonstrating how Instructor retrieves structured information from the provided text (or a chat message sequence).

The response model class is a plain PHP class whose type hints specify the types of the object's fields.

    use Cognesy\Instructor\StructuredOutput;

    // Step 0: Create .env file in your project root:
    // OPENAI_API_KEY=your_api_key

    // Step 1: Define target data structure(s)
    class Person {
        public string $name;
        public int $age;
    }

    // Step 2: Provide content to process
    $text = "His name is Jason and he is 28 years old.";

    // Step 3: Use Instructor to run LLM inference
    $person = (new StructuredOutput)
        ->withResponseClass(Person::class)
        ->withMessages($text)
        ->get();

    // Step 4: Work with structured response data
    assert($person instanceof Person); // true
    assert($person->name === 'Jason'); // true
    assert($person->age === 28); // true

    echo $person->name; // Jason
    echo $person->age; // 28

    var_dump($person);
    // Person {
    //     name: "Jason",
    //     age: 28
    // }
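Because the response model is just a typed class, a schema describing it can be derived with PHP reflection. The following standalone sketch shows the general idea for scalar properties only; `classToJsonSchema()` is a hypothetical helper, and Instructor's actual schema generation handles many more cases than this.

```php
<?php

class Person
{
    public string $name;
    public int $age;
}

/** Hypothetical helper: map typed public properties to a flat JSON Schema array. */
function classToJsonSchema(string $class): array
{
    // PHP builtin type name => JSON Schema type name
    $typeMap = ['string' => 'string', 'int' => 'integer', 'float' => 'number', 'bool' => 'boolean', 'array' => 'array'];
    $schema = ['type' => 'object', 'properties' => [], 'required' => []];
    foreach ((new ReflectionClass($class))->getProperties(ReflectionProperty::IS_PUBLIC) as $property) {
        $type = $property->getType();
        if (!$type instanceof ReflectionNamedType || !isset($typeMap[$type->getName()])) {
            continue; // this sketch skips untyped, union and class-typed properties
        }
        $schema['properties'][$property->getName()] = ['type' => $typeMap[$type->getName()]];
        if (!$type->allowsNull()) {
            $schema['required'][] = $property->getName();
        }
    }
    return $schema;
}

echo json_encode(classToJsonSchema(Person::class)), PHP_EOL;
// {"type":"object","properties":{"name":{"type":"string"},"age":{"type":"integer"}},"required":["name","age"]}
```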

NOTE: Instructor supports classes / objects as response models. If you want to extract simple types or enums, you need to wrap them in the Scalar adapter (see Extracting Scalar Values).

Validation

Instructor validates the LLM's response against the validation rules specified in your data model.

For further details on the available validation rules, check the Symfony Validation constraints.

    use Cognesy\Instructor\StructuredOutput;
    use Symfony\Component\Validator\Constraints as Assert;

    class Person {
        public string $name;
        #[Assert\PositiveOrZero]
        public int $age;
    }

    $text = "His name is Jason, he is -28 years old.";

    $person = (new StructuredOutput)
        ->withResponseClass(Person::class)
        ->with(
            messages: [['role' => 'user', 'content' => $text]],
        )
        ->get();

    // if the resulting object does not validate, Instructor throws an exception

Max Retries

If the maxRetries parameter is provided and the LLM response does not meet the validation criteria, Instructor makes further inference attempts until the results meet the requirements or maxRetries is reached.

Instructor uses validation errors to inform the LLM about the problems identified in the response, so that the LLM can try to self-correct in the next attempt.

    use Cognesy\Instructor\StructuredOutput;
    use Symfony\Component\Validator\Constraints as Assert;

    class Person {
        #[Assert\Length(min: 3)]
        public string $name;
        #[Assert\PositiveOrZero]
        public int $age;
    }

    $text = "His name is JX, aka Jason, he is -28 years old.";

    $person = (new StructuredOutput)
        ->with(
            messages: [['role' => 'user', 'content' => $text]],
            responseModel: Person::class,
            maxRetries: 3,
        )
        ->get();

    // if all LLM's attempts to self-correct the results fail, Instructor throws an exception

Output Modes

Instructor supports multiple output modes to allow working with various models depending on their capabilities.

- OutputMode::Json - generate structured output via the LLM's native JSON generation

- OutputMode::JsonSchema - use the LLM's strict JSON Schema mode to enforce the JSON Schema

- OutputMode::Tools - use the tool calling API to get the LLM to follow the provided schema

- OutputMode::MdJson - use prompting to generate structured output; a fallback for models that do not support JSON generation or tool calling

Additionally, you can use OutputMode::Text to get the LLM to generate text output without any structured data extraction.

- OutputMode::Text - generate text output

- OutputMode::Unrestricted - generate unrestricted output based on inputs provided by the user (with no enforcement of a specific output format)
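Since `OutputMode::MdJson` relies on prompting rather than an API-level guarantee, the JSON has to be located inside free-form text. Below is a minimal standalone sketch of that extraction step, assuming the model wraps its answer in a triple-backtick fenced block and falling back to the first `{...}` span otherwise; `extractMdJson()` is hypothetical and not Instructor's parser.

```php
<?php

/**
 * Hypothetical helper: decode the JSON found in the first triple-backtick
 * fenced block of $text, or in the first {...} span as a fallback.
 */
function extractMdJson(string $text): array
{
    $fence = str_repeat('`', 3);
    $pattern = '/' . $fence . '(?:json)?\s*(.*?)' . $fence . '/s';
    if (preg_match($pattern, $text, $m)) {
        $json = $m[1];
    } elseif (preg_match('/\{.*\}/s', $text, $m)) {
        $json = $m[0];
    } else {
        throw new RuntimeException('No JSON found in response');
    }
    return json_decode($json, true, 512, JSON_THROW_ON_ERROR);
}

// Simulated chatty model response with the JSON inside a fenced block.
$fence = str_repeat('`', 3);
$response = "Sure! Here is the data:\n{$fence}json\n{\"name\": \"Jason\", \"age\": 28}\n{$fence}\nLet me know if you need more.";
$data = extractMdJson($response);
echo $data['name'], PHP_EOL; // Jason
```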

Unified LLM API

The Instructor ecosystem uses Polyglot as a unified inference API layer supporting 20+ LLM providers.

Polyglot translates the familiar OpenAI chat completion API conventions into provider-specific idioms / APIs, so you can easily switch between LLM providers without rewriting your LLM connectivity code.
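As an illustration of the kind of translation involved, the standalone sketch below converts an OpenAI-style message list into the shape of an Anthropic Messages API request body, where the system prompt is sent as a top-level `system` field rather than as a message with the `system` role. This is not Polyglot's driver code: `toAnthropicRequest()` is a hypothetical helper, `'your-model-id'` is a placeholder, and a real driver also has to handle tools, images, streaming and response parsing.

```php
<?php

/**
 * Hypothetical converter: OpenAI-style chat messages => Anthropic-style
 * request body. System messages are lifted into the top-level "system" field.
 */
function toAnthropicRequest(array $messages, string $model, int $maxTokens): array
{
    $system = [];
    $chat = [];
    foreach ($messages as $message) {
        if ($message['role'] === 'system') {
            $system[] = $message['content'];
        } else {
            $chat[] = ['role' => $message['role'], 'content' => $message['content']];
        }
    }
    $body = ['model' => $model, 'max_tokens' => $maxTokens, 'messages' => $chat];
    if ($system !== []) {
        $body['system'] = implode("\n", $system);
    }
    return $body;
}

$body = toAnthropicRequest([
    ['role' => 'system', 'content' => 'Answer briefly.'],
    ['role' => 'user', 'content' => 'What is the capital of Germany?'],
], 'your-model-id', 256);

echo json_encode($body, JSON_PRETTY_PRINT), PHP_EOL;
```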

Example (using sync API)

    $answer = (new Inference)
        ->using('openai') // specify LLM connection preset (defined in config)
        ->with(messages: 'What is capital of Germany')
        ->get();

    echo $answer;

Example (using streaming API)

    $stream = (new Inference)
        ->using('anthropic') // specify LLM connection preset (defined in config)
        ->withMessages([['role' => 'user', 'content' => 'Describe capital of Brasil']])
        ->withOptions(['max_tokens' => 256])
        ->withStreaming()
        ->stream()
        ->responses();

    foreach ($stream as $partial) {
        echo $partial->contentDelta;
    }
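Judging by its name, each streamed partial response's `contentDelta` holds only the newly generated text, which the loop above prints chunk by chunk. The standalone sketch below mimics that consumption pattern with a generator in place of a real provider, and also accumulates the full answer; the `Partial` class and `fakeStream()` generator are hypothetical stand-ins, not Polyglot types.

```php
<?php

// Hypothetical stand-in for a partial response object.
final class Partial
{
    public function __construct(public readonly string $contentDelta) {}
}

/** Hypothetical generator simulating a provider that streams text in chunks. */
function fakeStream(): Generator
{
    foreach (['Brasilia ', 'is the ', 'capital of Brazil.'] as $chunk) {
        yield new Partial($chunk);
    }
}

$content = '';
foreach (fakeStream() as $partial) {
    echo $partial->contentDelta;        // show each chunk as soon as it arrives
    $content .= $partial->contentDelta; // keep the full text for later use
}
echo PHP_EOL; // prints: Brasilia is the capital of Brazil.
```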

Example (customize LLM connection)

    $config = new LLMConfig(
        apiUrl  : 'https://api.deepseek.com',
        apiKey  : Env::get('DEEPSEEK_API_KEY'),
        endpoint: '/chat/completions',
        model: 'deepseek-chat',
        maxTokens: 128,
        driver: 'deepseek',
    );

    $answer = (new Inference)
        ->withConfig($config)
        ->withMessages([['role' => 'user', 'content' => 'What is the capital of France']])
        ->withOptions(['max_tokens' => 64])
        ->withStreaming()
        ->get();

    echo $answer;

Documentation

Check out the documentation website for more details and examples of how to use Instructor for PHP.

Feature Highlights

Core features

- Get structured responses from LLMs without writing boilerplate code

- Validation of returned data

- Automated retries in case of errors when LLM responds with invalid data

- Integrate LLM support into your existing PHP code with minimal friction - no framework, no extensive code changes

- Framework-agnostic - use it with Laravel, Symfony, your custom framework, or with no framework at all

Various extraction modes

- Supports multiple extraction modes to allow working with various models depending on their capabilities

- OutputMode::Json - use response_format to get the LLM to follow the provided JSON Schema

- OutputMode::JsonSchema - use strict JSON Schema mode to get the LLM to follow the provided JSON Schema

- OutputMode::Tools - use the tool calling API to get the LLM to follow the provided JSON Schema

- OutputMode::MdJson - extract via prompting, nudging the LLM to generate output matching the provided JSON Schema

Flexible inputs

- Process various types of input data: text, series of chat messages or images using the same, simple API

- 'Structured-to-structured' processing - provide object or array as an input and get object with the results of inference back

- Provide demonstration examples to improve the quality of inference

Customization

- Define the response data model the way you want: type-hinted classes, JSON Schema arrays, or dynamic data shapes with the Structure class

- Customize prompts and retry prompts

- Use attributes or PHP DocBlocks to provide additional instructions for LLM

- Customize response model processing by providing your own implementation of schema, deserialization, validation and transformation interfaces
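PHP makes DocBlocks available at runtime through reflection, which is one way such descriptions can be picked up and passed to the LLM. The standalone sketch below covers only the reading side; `describeProperties()` is a hypothetical helper, and Instructor's own handling (the package depends on phpdocumentor/reflection-docblock) parses DocBlocks more thoroughly.

```php
<?php

class Person
{
    /** Full name, as written in the source text */
    public string $name;

    /** Age in whole years */
    public int $age;
}

/** Hypothetical helper: map property name => DocBlock text with comment markers stripped. */
function describeProperties(string $class): array
{
    $descriptions = [];
    foreach ((new ReflectionClass($class))->getProperties() as $property) {
        $doc = $property->getDocComment();
        if ($doc === false) {
            continue; // no DocBlock on this property
        }
        // Drop the opening and closing comment delimiters and leading "*" on each line.
        $text = preg_replace(['#^/\*\*#', '#\*/$#', '#^\s*\*\s?#m'], '', $doc);
        $descriptions[$property->getName()] = trim($text);
    }
    return $descriptions;
}

print_r(describeProperties(Person::class));
```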

Sync and streaming support

- Supports both synchronous and streaming responses

- Get partial updates & stream completed sequence items

Observability

- Get detailed insight into internal processing via events

- Debug mode to see the details of LLM API requests and responses

Support for multiple LLMs / API providers

- Easily switch between LLM providers

- Support for most popular LLM APIs (incl. OpenAI, Gemini, Anthropic, Cohere, Azure, Groq, Mistral, Fireworks AI, Together AI)

- OpenRouter support - access to 100+ language models

- Use local models with Ollama

Other capabilities

- Developer-friendly LLM context caching for reduced costs and faster inference (for Anthropic models)

- Developer-friendly data extraction from images (for OpenAI, Anthropic and Gemini models)

- Generate vector embeddings using APIs of multiple supported LLM providers

Documentation and examples

- Learn more from the growing documentation and 100+ cookbooks

Instructor in Other Languages

Check out implementations in other languages below:

- Python (original)

- Javascript (port)

- Elixir (port)

- Ruby (port)

If you want to port Instructor to another language, please reach out to us on Twitter - we'd love to help you get started!

Instructor Packages

This repository is a monorepo containing all of Instructor's components (required and optional). It hosts everything you need to work with LLMs via Instructor.

Individual components are also distributed as standalone packages that can be used independently.

Links to read-only repositories of the standalone package distributions:

- instructor-addons - extra capabilities and common LLM-related problem solutions

- instructor-aux - external tools and integrations, e.g. used by Instructor examples

- instructor-config - configuration management for Instructor

- instructor-evals - LLM output evaluation tools

- instructor-events - events and event listeners for Instructor

- instructor-http-client - easily switch between underlying HTTP client libraries (out-of-the-box support for Guzzle, Symfony, Laravel)

- instructor-hub - CLI tool for browsing and running Instructor examples

- instructor-messages - chat message sequence handling for Instructor

- instructor-polyglot - use a single API for inference and embeddings across most LLM providers, and easily switch between them (e.g., develop on Ollama, switch to Groq in production)

- instructor-schema - object schema handling for Instructor

- instructor-setup - CLI tool for publishing Instructor config files in your app

- instructor-struct - get dev friendly structured outputs from LLMs

- instructor-tell - CLI tool for executing LLM prompts in your terminal

- instructor-templates - text and chat template tools used by Instructor, supporting Twig, Blade and ArrowPipe formats

- instructor-utils - common utility classes used by Instructor packages

NOTE: If you are just starting to use Instructor, I recommend using the instructor-php package. It contains all the required components and is the easiest way to get started with the library.

License

This project is licensed under the terms of the MIT License.

Support

If you have any questions or need help, please reach out to me on Twitter or GitHub.

Contributing

If you want to help, check out some of the issues. All contributions are welcome - code improvements, documentation, bug reports, blog posts / articles, or new cookbooks and application examples.