padosoft / laravel-super-cache

A Laravel package for advanced caching with tags and namespaces in Redis.

Requires

- php: ^8.0

- ext-redis: *

- illuminate/support: ^9.0|^10.0|^11.0|^12.0

- laravel/framework: ^9.0|^10.0|^11.0|^12.0

- predis/predis: ^2.0

Requires (Dev)

- friendsofphp/php-cs-fixer: ^3.15

- laradumps/laradumps: 3.0|^4.0

- larastan/larastan: ^2.5.1|^3.0

- laravel/pint: ^1.16

- m6web/redis-mock: ^5.6

- mockery/mockery: ^1.5

- nunomaduro/collision: ^7.0|^8.0

- orchestra/testbench: ^7.0|^8.0|^9.0|^10.0

- phpcompatibility/php-compatibility: ^9.3

- phpmd/phpmd: ^2.15

- phpunit/phpunit: ^9.5|^10.0|^11.0

- spatie/laravel-ignition: ^2.3

- spatie/laravel-ray: ^1.32

- squizlabs/php_codesniffer: ^3.7

This package is auto-updated.

Last update: 2026-02-07 17:10:32 UTC

A powerful caching solution for Laravel that uses Redis with Lua scripting, batch processing, and optimized tag management to handle high volumes of keys efficiently.

Table of Contents

- Purpose

- Why Use This Package?

- Features

- Requires

- Installation and Configuration

- Usage Examples

- Architecture Overview

- Design Decisions and Performance

Purpose

laravel-super-cache is designed to provide a high-performance, reliable, and scalable caching solution for Laravel applications that require efficient tag-based cache invalidation. By leveraging Redis's native capabilities and optimizing the way tags are managed, this package addresses limitations in Laravel's built-in cache tag system, making it suitable for large-scale enterprise applications.

Why Use This Package?

Laravel's native caching mechanism has an implementation for tag-based cache management; however, it faces limitations, especially when dealing with high volumes of cache keys and frequent invalidations. Some of the issues include:

- Inconsistency with Tag Invalidation: Laravel uses a versioned tag strategy, which can lead to keys not being properly invalidated when associated tags change, particularly in highly concurrent environments.

- Performance Overhead: The default implementation in Laravel relies on a "soft invalidate" mechanism that increments tag versions instead of directly removing keys, which can lead to slower cache operations and memory growth (memory leaks).

- Scalability Issues: The handling of large volumes of tags and keys is not optimized for performance, making it difficult to maintain consistency and speed in enterprise-level applications.

laravel-super-cache addresses these limitations by providing an efficient, high-performance caching layer optimized for Redis, leveraging Lua scripting, and batch processing techniques.

Features

- Architecture for High Volume: Designed to handle thousands of cache keys with rapid creation and deletion, without performance degradation.

- Enterprise-Level Performance: Uses Redis's native capabilities and optimizes cache storage and retrieval for high-speed operations.

- Use of Lua Scripting for Efficiency: Employs Lua scripts for atomic operations, reducing network round-trips and improving consistency.

- Batch Processing and Pipelining: Processes cache operations in batches, reducing overhead and maximizing throughput.

- Parallel Processing with Namespaces: Enables parallel processing of expiry notifications by using namespace suffixing and configurable listeners.

Requires

- php: >=8.0

- illuminate/database: ^9.0|^10.0|^11.0

- illuminate/support: ^9.0|^10.0|^11.0

Installation and Configuration

To install laravel-super-cache, use Composer:

composer require padosoft/laravel-super-cache

After installing the package, add the service provider to the providers array in the config/app.php file of your main project:

    'providers' => [
        /*
         * Laravel Framework Service Providers...
         */
        Illuminate\Auth\AuthServiceProvider::class,
        ...

        /*
         * Super Cache
         */
        Padosoft\SuperCache\SuperCacheServiceProvider::class,
    ],

and then publish the configuration file:

php artisan vendor:publish --provider="Padosoft\SuperCache\SuperCacheServiceProvider"

The configuration file allows you to set:

- prefix: The prefix for all cache keys, preventing conflicts when using the same Redis instance.

- connection: The Redis connection to use, as defined in your config/database.php.

- SUPERCACHE_NUM_SHARDS: The number of shards to optimize performance for tag management.

- retry_max, batch_size, and time_threshold: Parameters to optimize batch processing and retries.

Activating the Listener for Expiry Notifications

The listener is responsible for handling expired cache keys and cleaning up associated tags.

To activate it, add the following command to your supervisor configuration:

    [program:supercache_listener]
    command=php artisan supercache:listener {namespace}
    numprocs=5

You can run multiple processes in parallel by setting different {namespace} values. This allows the listener to handle notifications in parallel for optimal performance.

Enabling Redis Expiry Notifications (required for listener)

To enable the expiry notifications required by laravel-super-cache, you need to configure Redis (or AWS ElastiCache) to send EXPIRED events.

Here's how you can do it:

For a Standard Redis Instance

- Edit Redis Configuration: Open the redis.conf file and set the notify-keyspace-events parameter to enable expiry notifications.

      notify-keyspace-events Ex

  This configuration enables notifications for key expirations (Ex), which is required for the listener to function correctly.

- Using Redis CLI: Alternatively, you can use the Redis CLI to set the configuration without editing the file directly.

      redis-cli config set notify-keyspace-events Ex

  This command will apply the changes immediately without needing a Redis restart.

For AWS ElastiCache Redis

If you are using Redis on AWS ElastiCache, follow these steps to enable expiry notifications:

- Access the AWS ElastiCache Console:

  - Go to the ElastiCache dashboard on your AWS account.

- Locate Your Redis Cluster:

  - Find your cluster or replication group that you want to configure.

- Modify the Parameter Group:

  - Go to the Parameter Groups section.

  - Find the parameter group associated with your cluster, or create a new one.

  - Search for the notify-keyspace-events parameter and set its value to Ex.

  - Save changes to the parameter group.

- Attach the Parameter Group to Your Redis Cluster:

  - Attach the modified parameter group to your Redis cluster.

  - A cluster reboot may be required for the changes to take effect.

After configuring the notify-keyspace-events parameter, Redis will publish EXPIRED events when keys expire, allowing the laravel-super-cache listener to process these events correctly.

Make sure PHP is configured with the following setting; otherwise the listener command exits after 60 seconds because the connection is interrupted:

default_socket_timeout = -1

Scheduled Command for Orphaned Key Cleanup (Optional but Recommended)

Although optional, it is recommended to configure a scheduled command that periodically cleans up any orphaned keys or sets left behind by unexpected interruptions or errors. This adds a safety net for keeping cache keys and tags consistent.

php artisan supercache:clean

This can be scheduled using Laravel's scheduler to run at appropriate intervals, ensuring your cache remains clean and optimized.
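As a minimal sketch, assuming a Laravel 9 or 10 application where scheduled tasks are registered in app/Console/Kernel.php, the command could be scheduled as follows. The hourly interval and the use of withoutOverlapping() are assumptions for illustration, not recommendations from the package.

```php
<?php

namespace App\Console;

use Illuminate\Console\Scheduling\Schedule;
use Illuminate\Foundation\Console\Kernel as ConsoleKernel;

class Kernel extends ConsoleKernel
{
    protected function schedule(Schedule $schedule): void
    {
        // Hourly is an assumed interval; tune it to how quickly orphans build up.
        $schedule->command('supercache:clean')
            ->hourly()
            ->withoutOverlapping(); // skip a run if the previous one is still going
    }
}
```

In Laravel 11 and later, the same call is usually made through the Schedule facade in routes/console.php instead.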

Usage Examples

Below are examples of using the SuperCacheManager and its facade SuperCache:

    use SuperCache\Facades\SuperCache;

    // Store an item in cache
    SuperCache::put('user:1', $user);

    // Store an item in cache with tags
    SuperCache::putWithTags('product:1', $product, ['products', 'featured']);

    // Retrieve an item from cache
    $product = SuperCache::get('product:1');

    // Check if a key exists
    $exists = SuperCache::has('user:1');

    // Increment a counter
    SuperCache::increment('views:product:1');

    // Decrement a counter
    SuperCache::decrement('stock:product:1', 5);

    // Get all keys matching a pattern
    $keys = SuperCache::getKeys(['product:*']);

    // Flush all cache
    SuperCache::flush();

For all options see SuperCacheManager class.

Architecture Overview

Design Decisions and Performance

Key and Tag Organization

Each cache key is stored with an associated set of tags. These tags allow efficient invalidation when certain categories of keys need to be cleared. The structure ensures that any cache invalidation affects only the necessary keys without touching unrelated data.

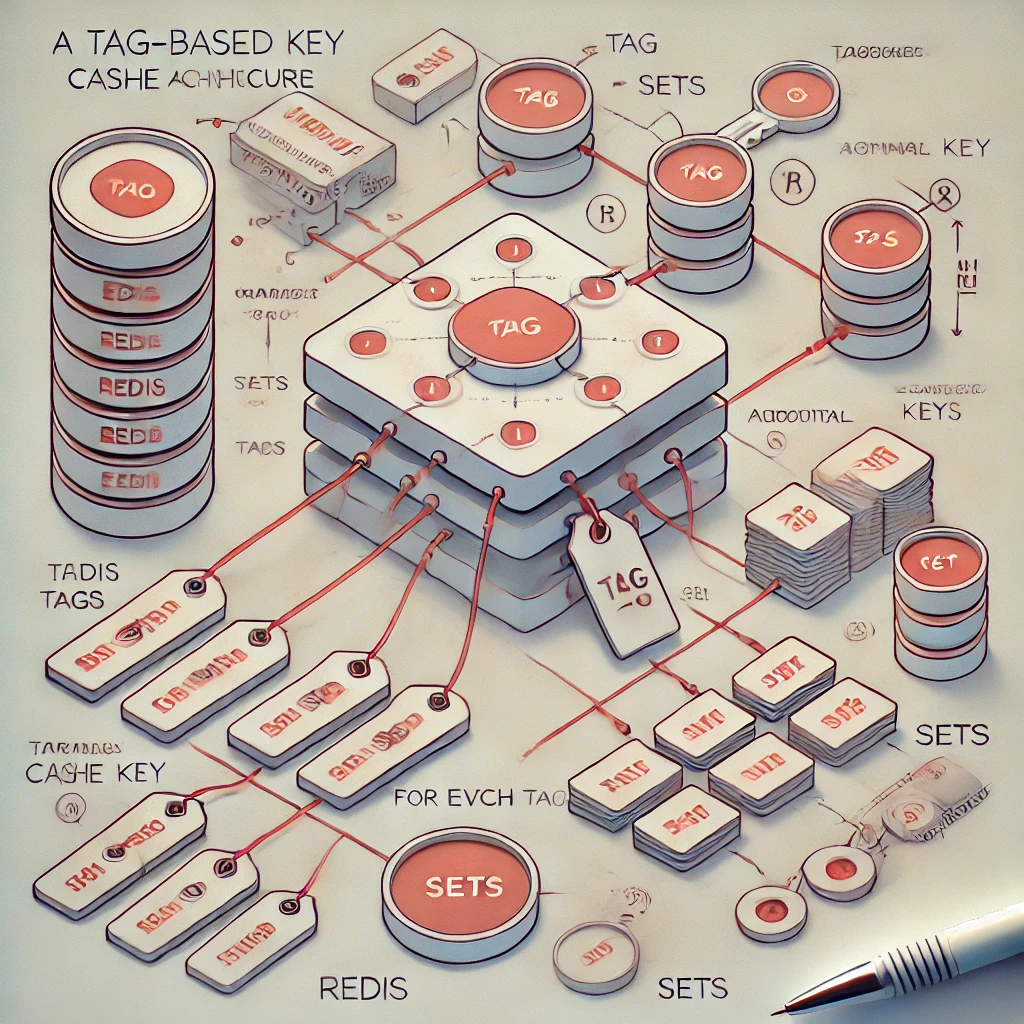

Tag-Based Cache Architecture

To efficiently handle cache keys and their associated tags, laravel-super-cache employs a well-defined architecture using Redis data structures. This ensures high performance for lookups, tag invalidations, and efficient management of keys. Below is a breakdown of how the package manages and stores these data structures in Redis.

3 Main Structures in Redis

- Key-Value Storage:

  - Each cache entry is stored as a key-value pair in Redis.

  - Naming Convention: The cache key is prefixed for the package (e.g., supercache:key:<actual-key>), ensuring no conflicts with other Redis data.

- Set for Each Tag (Tag-Key Sets):

  - For every tag associated with a key, a Redis set is created to hold all the keys associated with that tag.

  - Naming Convention: Each set is named with a pattern like supercache:tag:<tag>:shard:<shard-number>, where <tag> is the tag name and <shard-number> is determined by the sharding algorithm.

  - These sets allow quick retrieval of all keys associated with a tag, facilitating efficient cache invalidation by tag.

- Set for Each Key (Key-Tag Sets):

  - Each cache key has a set that holds all the tags associated with that key.

  - Naming Convention: The set is named as supercache:tags:<key>, where <key> is the actual cache key.

  - This structure allows quick identification of which tags are associated with a key, ensuring efficient clean-up when a key expires.

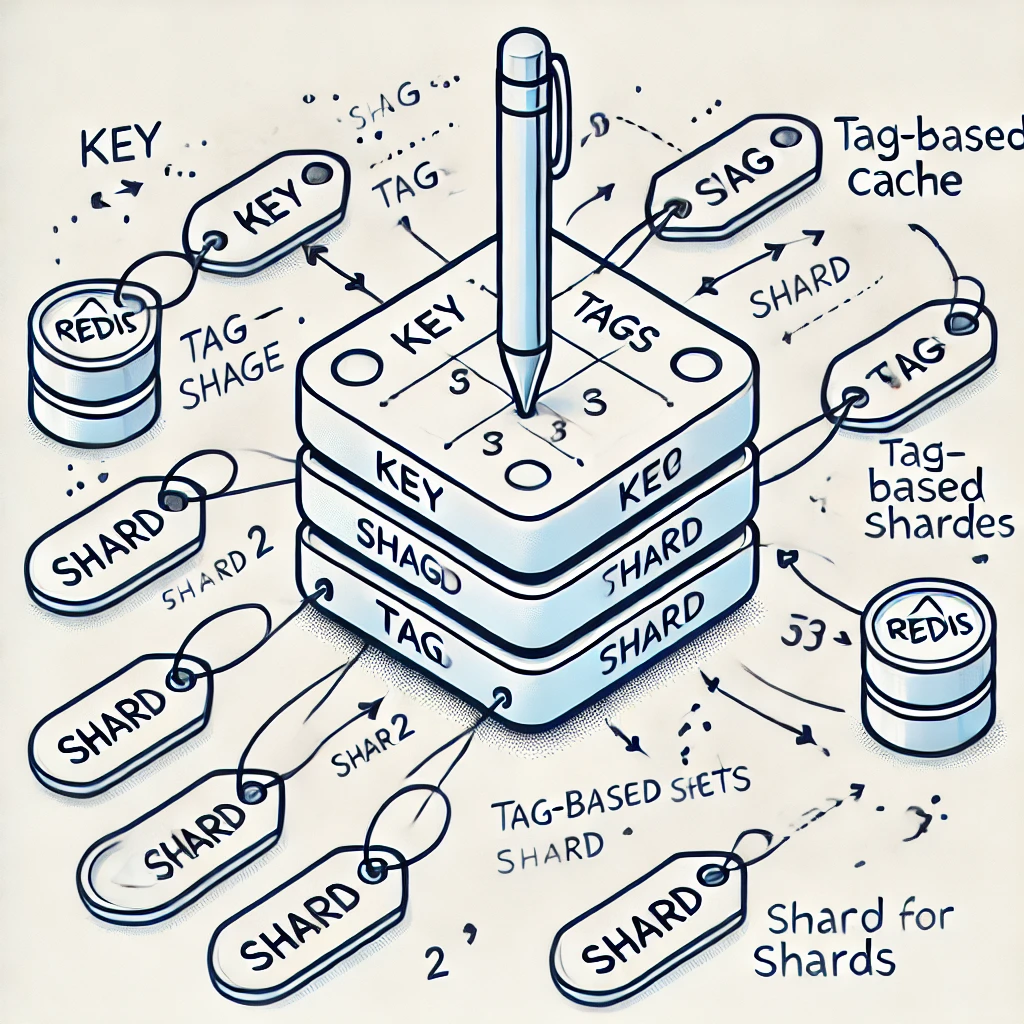

Sharding for Efficient Tag Management

To optimize performance when dealing with potentially large sets of keys associated with a single tag, laravel-super-cache employs a sharding strategy:

- Why Sharding?: A single tag might be associated with a large number of keys. If all keys for a tag were stored in a single set, this could degrade performance. Sharding splits these keys across multiple smaller sets, distributing the load.

- How Sharding Works: When a key is added to a tag, a fast hash function (e.g., crc32) is used to compute a shard index. The key is then stored in the appropriate shard for that tag.

  - Naming Convention for Sharded Sets: Each set for a tag is named as supercache:tag:<tag>:shard:<shard-number>.

  - The number of shards is configurable through the SUPERCACHE_NUM_SHARDS setting, allowing you to balance between performance and memory usage.

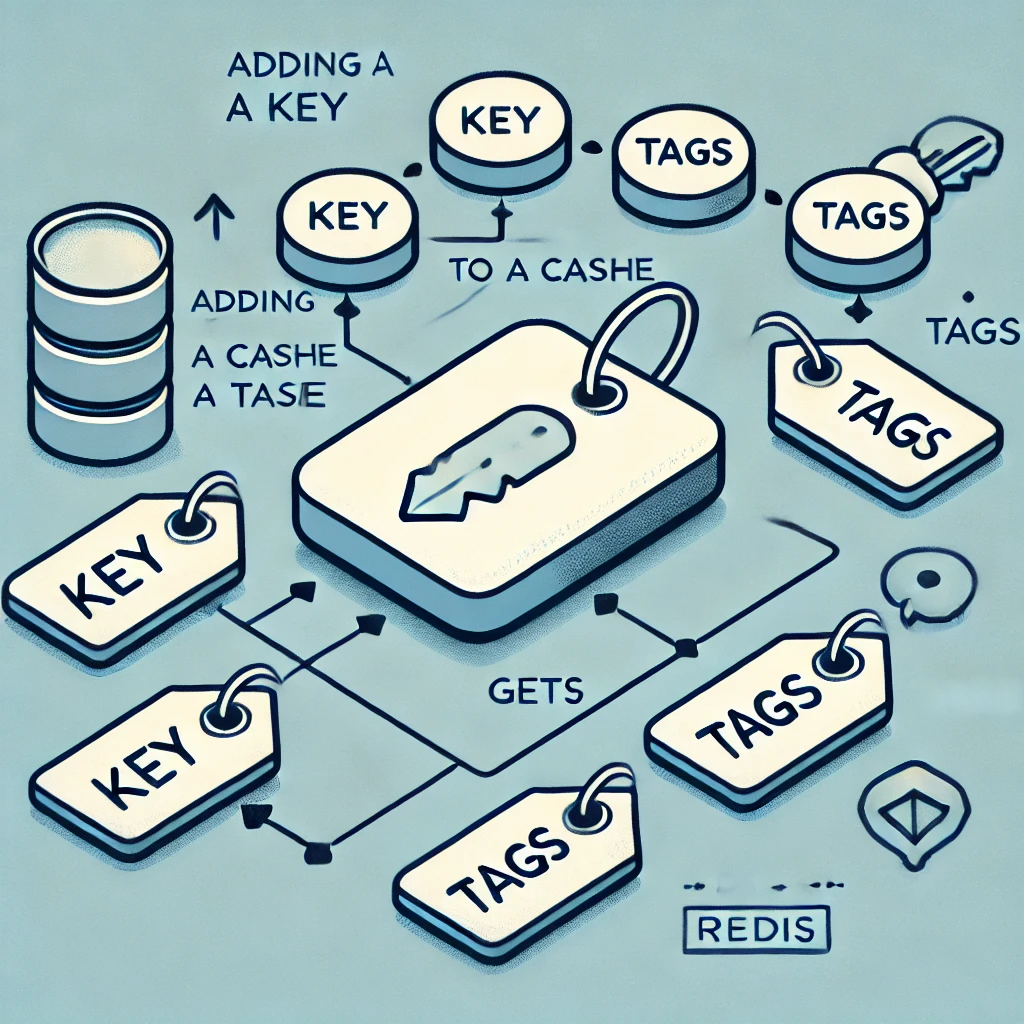
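The shard selection described above can be sketched in a few lines of PHP. This is an illustration under assumptions, not the package's code: the function names are hypothetical, and hashing only the key and reducing it with a modulo is a guess at how crc32 is applied (the package may also mix the tag into the hash).

```php
<?php

/**
 * Illustrative only: maps a cache key to a shard index with crc32.
 * The modulo reduction is an assumption about how the hash is used.
 */
function shardIndex(string $key, int $numShards): int
{
    if ($numShards < 1) {
        throw new InvalidArgumentException('numShards must be at least 1');
    }

    // crc32() returns a non-negative integer on 64-bit PHP builds.
    return crc32($key) % $numShards;
}

/**
 * Builds the sharded tag-set name following the naming convention above.
 */
function tagShardSetName(string $tag, string $key, int $numShards, string $prefix = 'supercache:'): string
{
    return sprintf('%stag:%s:shard:%d', $prefix, $tag, shardIndex($key, $numShards));
}

// Prints a name of the form supercache:tag:electronics:shard:<n>
echo tagShardSetName('electronics', 'product:123', 256), PHP_EOL;
```

Because the shard index is derived deterministically from the key, the same key always lands in the same shard of a given tag, so it can be found again at removal time without scanning every shard.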

Example: Creating a Cache Key with Tags

When you create a cache key with associated tags, here's what happens:

- Key-Value Pair: A key-value pair is stored in Redis, prefixed as supercache:key:<actual-key>.

- Tag-Key Sets: For each tag associated with the key:

  - A shard is determined using a hash function.

  - The key is added to the corresponding sharded set for the tag, named as supercache:tag:<tag>:shard:<shard-number>.

- Key-Tag Set: A set is created to associate the key with its tags, named as supercache:tags:<key>.

This structure allows efficient lookup, tagging, and invalidation of cache entries.

Example

Suppose you cache a key product:123 with tags electronics and featured. The following structures would be created in Redis:

- Key-Value Pair:

  - supercache:key:product:123 -> <value>

- Tag-Key Sets:

  - Assuming SUPERCACHE_NUM_SHARDS is set to 256, and product:123 hashes to shard 42 for electronics and shard 85 for featured:

    - supercache:tag:electronics:shard:42 -> contains supercache:key:product:123

    - supercache:tag:featured:shard:85 -> contains supercache:key:product:123

- Key-Tag Set:

  - supercache:tags:product:123 -> contains electronics, featured
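To make the interplay of the three structures concrete, here is a small in-memory model in which PHP arrays stand in for Redis strings and sets. It is a sketch under assumptions: the class and method names are hypothetical, nothing here talks to Redis, and the shard rule (crc32 of the key modulo the shard count) is assumed rather than taken from the package.

```php
<?php

/**
 * In-memory stand-in for the three Redis structures.
 * Plain PHP arrays replace Redis strings and sets.
 */
final class TagIndexModel
{
    /** @var array<string, mixed> supercache:key:<key> => value */
    public array $values = [];

    /** @var array<string, array<string, true>> set name => members */
    public array $sets = [];

    public function __construct(private int $numShards = 256)
    {
    }

    public function putWithTags(string $key, mixed $value, array $tags): void
    {
        $this->values["supercache:key:$key"] = $value;

        foreach ($tags as $tag) {
            // Tag-key set: one shard of the tag holds the key.
            $this->sets[$this->tagSet($tag, $key)]["supercache:key:$key"] = true;
            // Key-tag set: the key remembers its tags.
            $this->sets["supercache:tags:$key"][$tag] = true;
        }
    }

    public function invalidateTag(string $tag): void
    {
        // Walk every shard of the tag and forget each key found there.
        for ($shard = 0; $shard < $this->numShards; $shard++) {
            $setName = "supercache:tag:$tag:shard:$shard";
            foreach (array_keys($this->sets[$setName] ?? []) as $valueKey) {
                $this->forget(substr($valueKey, strlen('supercache:key:')));
            }
        }
    }

    public function forget(string $key): void
    {
        $tagsSet = "supercache:tags:$key";

        // Use the key-tag set to find every tag shard that references the key.
        foreach (array_keys($this->sets[$tagsSet] ?? []) as $tag) {
            $setName = $this->tagSet((string) $tag, $key);
            unset($this->sets[$setName]["supercache:key:$key"]);
            if (empty($this->sets[$setName])) {
                unset($this->sets[$setName]); // Redis drops empty sets on its own
            }
        }

        unset($this->sets[$tagsSet], $this->values["supercache:key:$key"]);
    }

    private function tagSet(string $tag, string $key): string
    {
        // Assumption: shard = crc32(key) mod number of shards.
        return sprintf('supercache:tag:%s:shard:%d', $tag, crc32($key) % $this->numShards);
    }
}

$model = new TagIndexModel(256);
$model->putWithTags('product:123', ['name' => 'TV'], ['electronics', 'featured']);
$model->invalidateTag('electronics');
var_dump(array_keys($model->values));
```

The key-tag set is what lets forget() remove a key from all of its tags without scanning unrelated sets, which is the property the architecture relies on for clean-up.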

Benefits of This Architecture

- Efficient Lookups and Invalidation: Using sets for both tags and keys enables quick lookups and invalidation of cache entries when necessary.

- Scalable Performance: The sharding strategy distributes the keys associated with tags across multiple sets, ensuring performance remains high even when a tag has a large number of keys.

- Atomic Operations: When a cache key is added or removed, all necessary operations (like updating sets and shards) are executed atomically, ensuring data consistency.

By following this architecture, laravel-super-cache is designed to handle high-volume cache operations efficiently while maintaining the flexibility and scalability needed for large enterprise applications.

Sharding for Efficient Tag Management

Tags are distributed across multiple shards to optimize performance.

When a key is associated with a tag, it is added to a specific shard determined by a fast hashing function (crc32).

This sharding reduces the performance bottleneck by preventing single large sets from slowing down the cache operations.

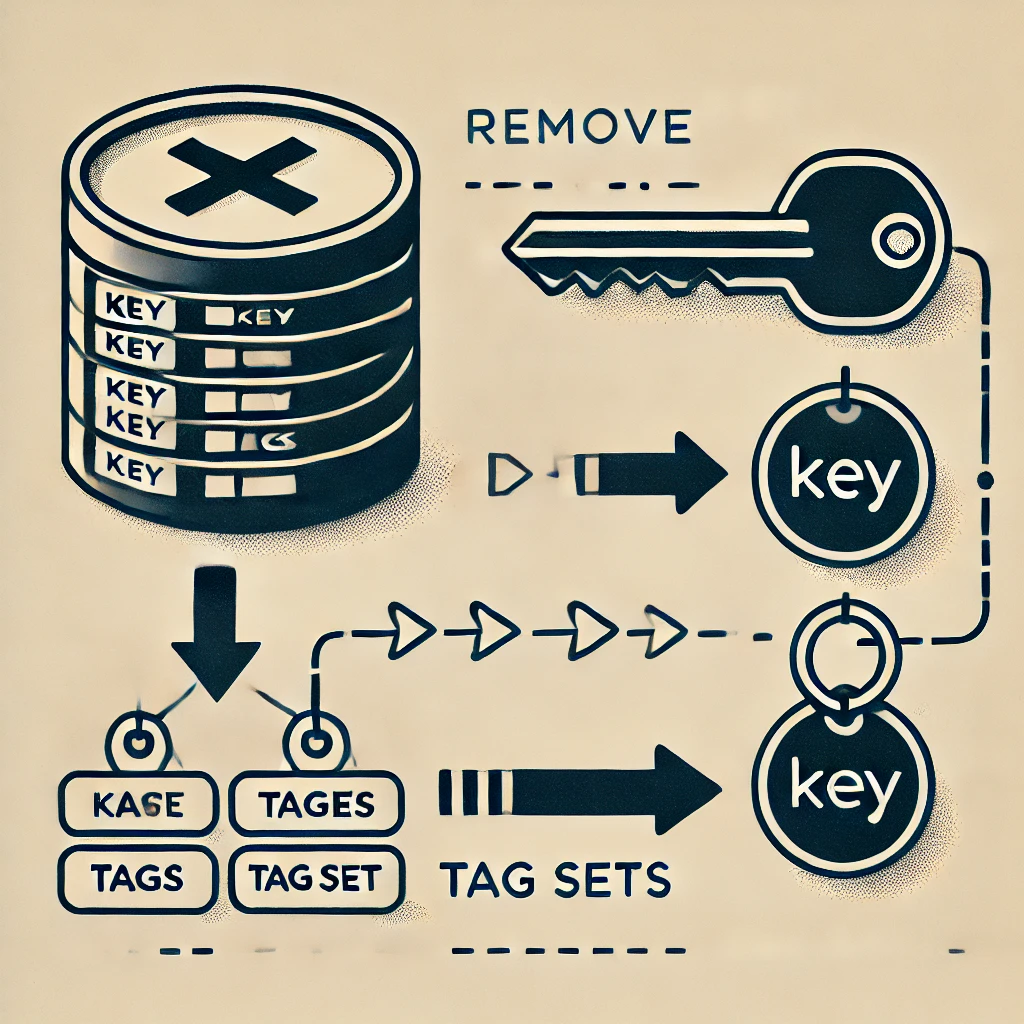

Locks for Concurrency Control

When a key is processed for expiration, an optimistic lock is acquired to prevent race conditions, ensuring that no concurrent process attempts to alter the same key simultaneously. The lock has a short TTL to ensure it is quickly released after processing.
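The README does not show how the lock is implemented, so the following is only a sketch of a lock with a short TTL, simulated in memory. The class and method names are hypothetical. With a real Redis client, the acquire step is commonly expressed as a single SET <lock-key> <token> NX EX <ttl> command.

```php
<?php

/**
 * In-memory imitation of a short-lived lock.
 * Not the package's API; names are illustrative.
 */
final class ShortLivedLock
{
    /** @var array<string, float> lock name => expiry timestamp */
    private array $expiresAt = [];

    public function acquire(string $name, float $ttlSeconds, ?float $now = null): bool
    {
        $now ??= microtime(true);

        // A lock that is still within its TTL belongs to another process.
        if (isset($this->expiresAt[$name]) && $this->expiresAt[$name] > $now) {
            return false;
        }

        $this->expiresAt[$name] = $now + $ttlSeconds;

        return true;
    }

    public function release(string $name): void
    {
        unset($this->expiresAt[$name]);
    }
}

$lock = new ShortLivedLock();
if ($lock->acquire('lock:product:123', 5.0)) {
    // ... clean up the expired key here ...
    $lock->release('lock:product:123');
}
```

The TTL matters because a process that crashes while holding the lock never calls release(); the expiry frees the key for the next worker.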

Namespace Suffix for Parallel Processing

To handle high volumes of expiry notifications efficiently, the listener processes them in parallel by suffixing namespaces to keys. Each process handles notifications for a specific namespace, allowing for scalable parallel processing. The listener uses batch processing with Lua scripting to optimize performance.

Redis Expiry Notifications and Listener System

Handling Expire Correctly with TTLs

To ensure keys are deleted from Redis when they expire, a combination of TTL and expiry notifications is used. If a key expires naturally, the listener is notified to clean up any associated tag sets. This ensures no "orphan" references are left in the cache.

Listener for Expiry Notifications

The listener is responsible for handling expired cache keys and cleaning up their associated tags efficiently, using a combination of Lua scripts, batch processing, and pipelining.

You can run multiple processes in parallel by setting different {namespace} values. This allows the listener to handle notifications in parallel for optimal performance.

To ensure that keys are cleaned up efficiently upon expiration, laravel-super-cache uses Redis expiry notifications in combination with a dedicated listener process. This section explains how the notification system works, how the listener consumes these events, and the benefits of using batching, pipelines, and Lua scripts for performance optimization.

Redis Expiry Notifications: How They Work

Redis has a mechanism to publish notifications when certain events occur. One of these events is the expiration of keys (EXPIRED). The laravel-super-cache package uses these expiry notifications to clean up the tag associations whenever a cache key expires.

Enabling Expiry Notifications in Redis:

- Redis must be configured to send EXPIRED notifications. This is done by setting the notify-keyspace-events parameter to include Ex (for expired events).

- When a key in Redis reaches its TTL (Time-To-Live) and expires, an EXPIRED event is published to the Redis notification channel.
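With notify-keyspace-events set to Ex, Redis publishes each expired key name on a channel of the form __keyevent@<db>__:expired. The sketch below shows how such events could be consumed with the phpredis extension. It is not the package's listener: the function names and the prefix filter are assumptions, and the subscription function is defined but not called because it needs a running Redis server.

```php
<?php

/**
 * Extracts the cache key from a keyevent notification, or returns null
 * when the message is not an expiry event for the given prefix.
 * The prefix filter is an assumption used for illustration.
 */
function expiredKeyFromEvent(string $channel, string $message, string $prefix = 'supercache:key:'): ?string
{
    // Keyevent channels look like "__keyevent@<db>__:expired"; the payload is the key name.
    if (preg_match('/^__keyevent@\d+__:expired$/', $channel) !== 1) {
        return null;
    }

    if (!str_starts_with($message, $prefix)) {
        return null;
    }

    return substr($message, strlen($prefix));
}

/**
 * Blocking subscription using phpredis (ext-redis).
 * Requires a live Redis connection, so it is only defined here.
 */
function listenForExpirations(\Redis $redis, callable $onExpired): void
{
    // Disable the read timeout so the long-lived subscription is not cut off.
    $redis->setOption(\Redis::OPT_READ_TIMEOUT, -1);

    $redis->psubscribe(
        ['__keyevent@*__:expired'],
        function (\Redis $redis, string $pattern, string $channel, string $message) use ($onExpired): void {
            $key = expiredKeyFromEvent($channel, $message);
            if ($key !== null) {
                $onExpired($key);
            }
        }
    );
}

var_dump(expiredKeyFromEvent('__keyevent@0__:expired', 'supercache:key:product:123'));
```

Note that Redis delivers these notifications on a fire-and-forget basis: events published while no listener is connected are lost, which is one reason a periodic clean-up command is useful as a safety net.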

The Listener: Consuming Expiry Events

The supercache:listener command is a long-running process that listens for these EXPIRED events and performs clean-up tasks when they are detected. Specifically, it:

- Subscribes to Redis Notifications: The listener subscribes to the EXPIRED events, filtered by a specific namespace to avoid processing unrelated keys.

- Accumulates Expired Keys in Batches: When a key expires, the listener adds it to an in-memory batch. This allows for processing multiple keys at once rather than handling each key individually.

- Processes Batches Using Pipelines and Lua Scripts: Once a batch reaches a size or time threshold, it is processed in bulk using Redis pipelines or Lua scripts for maximum efficiency.
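The accumulate-then-flush behavior can be sketched as a small class. This is an assumption-laden illustration rather than the package's implementation: the class name, constructor parameters, and default thresholds are hypothetical, loosely mirroring the batch_size and time_threshold settings mentioned earlier.

```php
<?php

/**
 * Collects expired keys and hands them to a callback once a size or
 * time threshold is reached. Names and defaults are illustrative.
 */
final class ExpiredKeyBatcher
{
    /** @var string[] */
    private array $batch = [];

    private float $startedAt = 0.0;

    /** @param callable(string[]): void $flushHandler */
    public function __construct(
        private $flushHandler,
        private int $batchSize = 100,
        private float $timeThreshold = 1.0
    ) {
    }

    public function add(string $key, ?float $now = null): void
    {
        $now ??= microtime(true);

        // Start the clock when the first key of a new batch arrives.
        if ($this->batch === []) {
            $this->startedAt = $now;
        }

        $this->batch[] = $key;

        if (count($this->batch) >= $this->batchSize
            || ($now - $this->startedAt) >= $this->timeThreshold) {
            $this->flush();
        }
    }

    public function flush(): void
    {
        if ($this->batch === []) {
            return;
        }

        $keys = $this->batch;
        $this->batch = [];

        // In a real listener this would run a pipeline or a Lua script.
        ($this->flushHandler)($keys);
    }
}

$batcher = new ExpiredKeyBatcher(
    fn (array $keys) => print('flushing ' . count($keys) . " keys\n"),
    3
);
foreach (['a', 'b', 'c'] as $key) {
    $batcher->add($key);
}
```

In this sketch the time threshold is only checked when a new key arrives, so a quiet period could leave a partial batch waiting. A long-running listener would also need a periodic flush() call to cover that case.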

Performance Benefits: Batching, Pipeline, and Lua Scripts

- Batching in Memory:

  - What is it? Instead of processing each expired key as soon as it is detected, the listener accumulates keys in a batch.

  - Benefits: Reduces the overhead of individual operations, as multiple keys are processed together, reducing the number of calls to Redis.

- Using Redis Pipelines:

  - What is it? A pipeline in Redis allows multiple commands to be sent to the server in one go, reducing the number of network round-trips.

  - Benefits: Processing a batch of keys in a single pipeline operation is much faster than processing each key individually, as it minimizes network latency.

- Executing Batch Operations with Lua Scripts:

  - What is it? Lua scripts allow you to execute multiple Redis commands atomically, ensuring that all operations on a batch of keys are processed as a single unit within Redis.

  - Benefits:

    - Atomicity: Ensures that all related operations (e.g., removing a key and cleaning up its associated tags) happen together without interference from other processes.

    - Performance: Running a Lua script directly on the Redis server is faster than issuing multiple commands from an external client, as it reduces the need for multiple network calls and leverages Redis’s internal processing speed.

Parallel Processing with Namespaces

To improve scalability, the listener allows processing multiple namespaces in parallel. Each listener process is assigned to handle a specific namespace, ensuring that the processing load is distributed evenly across multiple processes.

- Benefit: Parallel processing enables your system to handle high volumes of expired keys efficiently, without creating a performance bottleneck.

Example Workflow: Handling Key Expiry

- Key Expiration Event: A cache key product:123 reaches its TTL and expires. Redis publishes an EXPIRED event for this key.

- Listener Accumulates Key: The listener process receives the event and adds product:123 to an in-memory batch.

- Batch Processing Triggered: Once the batch reaches a size threshold (e.g., 100 keys) or a time threshold (e.g., 1 second), the listener triggers the batch processing.

- Batch Processing with Lua Script:

  - A Lua script is executed on Redis to:

    - Verify if the key is actually expired (prevent race conditions).

    - Remove the key from all associated tag sets.

    - Delete the key-tag association set.

  - This entire process is handled atomically by the Lua script for consistency and performance.
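The three clean-up steps in the workflow above can be mirrored on plain PHP arrays to show the logic. In the package this work is described as running inside a Lua script on Redis, where it is atomic; the PHP below is not atomic and is only a sketch. The function name, the array layout, and the shard rule (crc32 of the key modulo the shard count) are assumptions.

```php
<?php

/**
 * Mirrors the clean-up steps on PHP arrays instead of Redis.
 *
 * @param array<string, mixed> $values live value keys (supercache:key:<key>)
 * @param array<string, array<string, true>> $sets tag-key and key-tag sets
 * @param string[] $expiredKeys batch of keys reported as expired
 * @return string[] keys that were actually cleaned up
 */
function cleanUpExpiredBatch(array &$values, array &$sets, array $expiredKeys, int $numShards): array
{
    $cleaned = [];

    foreach ($expiredKeys as $key) {
        $valueKey = 'supercache:key:' . $key;

        // Step 1: skip keys that were written again after the notification.
        if (array_key_exists($valueKey, $values)) {
            continue;
        }

        // Step 2: remove the key from every tag shard it belongs to.
        $tagsSet = 'supercache:tags:' . $key;
        $shard = crc32($key) % $numShards; // assumed sharding rule
        foreach (array_keys($sets[$tagsSet] ?? []) as $tag) {
            $setName = "supercache:tag:$tag:shard:$shard";
            unset($sets[$setName][$valueKey]);
            if (($sets[$setName] ?? null) === []) {
                unset($sets[$setName]); // Redis removes empty sets automatically
            }
        }

        // Step 3: delete the key-tag association set.
        unset($sets[$tagsSet]);
        $cleaned[] = $key;
    }

    return $cleaned;
}
```

The existence check in step 1 is what guards against the race where a key expires, is immediately re-cached, and would otherwise lose its freshly written tag associations.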

Why This Approach Optimizes Performance

By combining batching, pipelining, and Lua scripts, the package ensures:

- Reduced Network Overhead: Fewer round-trips between your application and Redis.

- Atomic Operations: Lua scripts guarantee that all necessary operations for a key's expiry are handled in a single atomic block.

- Efficient Resource Utilization: Memory batching allows for efficient use of system resources, processing large numbers of keys quickly.

- Parallel Scalability: By using multiple listeners across namespaces, your system can handle large volumes of expirations without creating performance bottlenecks.

This approach provides a robust, scalable, and highly performant cache management system for enterprise-grade Laravel applications.

Scheduled Command for Orphaned Key Cleanup

Optionally, a scheduled command can be configured to periodically clean up any orphaned keys or sets left due to unexpected interruptions or errors. This adds an additional safety net to maintain consistency across cache keys and tags.

php artisan supercache:clean

This can be scheduled using Laravel's scheduler to run at appropriate intervals, ensuring your cache remains clean and optimized.

Conclusion

With laravel-super-cache, your Laravel application can achieve enterprise-grade caching performance with robust tag management, efficient key invalidation, and seamless parallel processing. Enjoy a faster, more reliable cache that scales effortlessly with your application's needs.

Change log

Please see CHANGELOG for more information on what has changed recently.

Testing

composer test

Contributing

Please see CONTRIBUTING for details.

Security

If you discover any security related issues, please email instead of using the issue tracker.

Credits

About Padosoft

Padosoft (https://www.padosoft.com) is a software house based in Florence, Italy. Specialized in E-commerce and web sites.

License

The MIT License (MIT). Please see License File for more information.