neuron-core / neuron-laravel

Official Neuron AI Laravel SDK.


Requires

- php: ^8.2

- illuminate/console: ^10.0|^11.0|^12.0

- illuminate/contracts: ^10.0|^11.0|^12.0

- illuminate/support: ^10.0|^11.0|^12.0

- neuron-core/neuron-ai: ^2.10

Requires (Dev)

- friendsofphp/php-cs-fixer: ^3.75

- larastan/larastan: ^3.0

- livewire/livewire: ^3.7

- orchestra/testbench: ^10.0

- rector/rector: ^2.2

- tomasvotruba/type-coverage: ^2.0

Suggests

- inspector-apm/inspector-laravel: ^4.18.2



This package provides a development kit designed around Laravel integration points, without limiting access to Neuron's native components. You can also use it as inspiration for designing your own custom integration pattern.

The package aims to make it easier for Laravel developers to get started with AI agent development using the Neuron AI framework. Neuron doesn't need invasive abstractions: it already has a very simple syntax, 100% typed code, and clear interfaces you can rely on to develop your agentic system or create custom plugins and extensions.

What this package provides

- A ready-to-use configuration file for AI provider and embeddings provider credentials

- A few artisan commands to create the most used components and reduce boilerplate code

- Facades to automatically instantiate providers and vector stores

- Ready-to-run migrations if you want to use the EloquentChatHistory component

- AI coding assistant guidelines integrated with Laravel Boost to help AI write better code

What is Neuron?

Neuron is the leading PHP framework for creating and orchestrating AI Agents. It allows you to integrate AI entities in your PHP applications with a powerful and flexible architecture. We provide tools for the entire agentic application development lifecycle, from LLM interfaces and data loading to multi-agent orchestration, monitoring, and debugging. In addition, we provide tutorials and other educational content to help you get started using AI Agents in your projects.

Requirements

- PHP >= 8.2

- Laravel >= 10.x

Install

Install the latest version with Composer:

composer require neuron-core/neuron-laravel

Configuration file

If you want to customize the configuration beyond the environment variables, you can publish the package configuration file to config/neuron.php in your project:

php artisan vendor:publish --tag=neuron-config

Create an Agent

To create a new AI agent, run the following command:

php artisan neuron:agent MyAgent

This will create a new agent class named MyAgent.php in your app/Neuron/Agents folder, with a couple of basic methods inside.

Available Artisan Commands

The package ships with a few artisan commands to reduce boilerplate code and make the setup process easier for the most common Neuron AI components.

# Create an agent

php artisan neuron:agent MyAgent

# Create a RAG

php artisan neuron:rag MyRAG

# Create a tool

php artisan neuron:tool MyTool

# Create a workflow

php artisan neuron:workflow MyWorkflow

# Create a node

php artisan neuron:node CustomNode

AI Providers

Neuron allows you to implement AI agents using many different providers, like Anthropic, Gemini, OpenAI, Ollama, Mistral, and many more. Learn more about supported providers in the Neuron AI documentation: https://docs.neuron-ai.dev/the-basics/ai-provider

To get an instance of the AI Provider you want to attach to your agent, you can use the NeuronAI\Laravel\Facades\AIProvider facade.

namespace App\Neuron;

use NeuronAI\Agent;
use NeuronAI\SystemPrompt;
use NeuronAI\Laravel\Facades\AIProvider;
use NeuronAI\Providers\AIProviderInterface;

class YouTubeAgent extends Agent
{
    protected function provider(): AIProviderInterface
    {
        // Return an instance of Anthropic, OpenAI, Gemini, Ollama, etc.
        return AIProvider::driver('anthropic');
    }

    public function instructions(): string
    {
        // Build the system prompt from the values in config/neuron.php.
        return (string) new SystemPrompt(...config('neuron.system_prompt'));
    }
}

You can configure the appropriate API key in your environment file:

# Support for: anthropic, gemini, openai, openai-responses, mistral, ollama, huggingface, deepseek
NEURON_AI_PROVIDER=anthropic

ANTHROPIC_KEY=
ANTHROPIC_MODEL=

GEMINI_KEY=
GEMINI_MODEL=

OPENAI_KEY=
OPENAI_MODEL=

MISTRAL_KEY=
MISTRAL_MODEL=

OLLAMA_URL=
OLLAMA_MODEL=

# And many others

You can see all the available providers in the documentation: https://docs.neuron-ai.dev/the-basics/ai-provider
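As a minimal sketch of how the YouTubeAgent class above might be called from application code, the route below sends a user message to the agent and returns the reply as JSON. The /ask URI and the question input field are hypothetical names chosen for illustration. The make(), chat(), UserMessage, and getContent() calls are assumed to behave as described in the Neuron AI documentation for the 2.x line this package requires, so check them against the version you have installed.

```php
<?php

// routes/web.php (hypothetical placement)

use App\Neuron\YouTubeAgent;
use Illuminate\Support\Facades\Route;
use NeuronAI\Chat\Messages\UserMessage;

// Hypothetical endpoint: the URI and the "question" field are illustrative only.
Route::post('/ask', function () {
    $question = (string) request()->input('question', '');

    // Assumption: make() builds the agent and chat() returns the reply message.
    $response = YouTubeAgent::make()->chat(new UserMessage($question));

    return response()->json(['answer' => $response->getContent()]);
});
```

Because the provider is resolved through the AIProvider facade inside the agent, switching from Anthropic to another driver should not require changes to this calling code.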

RAG (embeddings & vector stores)

If you want to implement a RAG system, the configuration file also lets you configure the embeddings provider and vector store used by your RAG agents, along with their connection parameters (API key, model, etc.).

Here is an example of how to configure the embedding provider and vector store in the RAG component:

namespace App\Neuron;

use NeuronAI\Laravel\Facades\AIProvider;
use NeuronAI\Laravel\Facades\EmbeddingProvider;
use NeuronAI\Laravel\Facades\VectorStore;
use NeuronAI\Providers\AIProviderInterface;
use NeuronAI\RAG\Embeddings\EmbeddingsProviderInterface;
use NeuronAI\RAG\RAG;
use NeuronAI\RAG\VectorStore\VectorStoreInterface;

class MyChatBot extends RAG
{
    protected function provider(): AIProviderInterface
    {
        return AIProvider::driver('anthropic');
    }

    protected function embeddings(): EmbeddingsProviderInterface
    {
        return EmbeddingProvider::driver('openai');
    }

    protected function vectorStore(): VectorStoreInterface
    {
        return VectorStore::driver('file');
    }
}

You can go to the Neuron AI documentation to learn more about RAG and its available configuration options: https://docs.neuron-ai.dev/rag/rag

EloquentChatHistory

Neuron provides a built-in system for managing the memory of a chat session with the agent. Many Q&A applications support a back-and-forth conversation with the LLM, which means the application needs some sort of "memory" of past questions and answers, and some logic for incorporating those into its current thinking.

Here is the documentation: https://docs.neuron-ai.dev/the-basics/chat-history-and-memory

The package ships with a ready-to-use migration for the EloquentChatHistory component. Here is the command to copy the migration into your project's database/migrations/neuron folder:

php artisan vendor:publish --tag=neuron-migrations

And then run the migrations:

php artisan migrate --path=/database/migrations/neuron

Read more about Eloquent Chat History in the Neuron AI documentation: https://docs.neuron-ai.dev/the-basics/chat-history-and-memory#eloquentchathisotry
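Once the migration has run, an agent can be pointed at the database-backed history. The following is a rough sketch under several assumptions rather than the package's confirmed API: SupportAgent is a hypothetical agent name, App\Models\ChatMessage is a hypothetical Eloquent model mapped to the table created by the published migration, and the threadId, modelClass, and contextWindow argument names are assumed from the Neuron AI chat history documentation linked above. Verify them against your installed version.

```php
<?php

namespace App\Neuron\Agents;

use App\Models\ChatMessage; // hypothetical model for the published migration's table
use NeuronAI\Agent;
use NeuronAI\Chat\History\ChatHistoryInterface;
use NeuronAI\Chat\History\EloquentChatHistory;
use NeuronAI\Laravel\Facades\AIProvider;
use NeuronAI\Providers\AIProviderInterface;

// Hypothetical agent that stores its conversation in the database.
class SupportAgent extends Agent
{
    protected function provider(): AIProviderInterface
    {
        return AIProvider::driver('anthropic');
    }

    protected function chatHistory(): ChatHistoryInterface
    {
        // Assumption: named arguments as shown in the Neuron AI documentation.
        return new EloquentChatHistory(
            threadId: 'THREAD_ID',          // placeholder: one ID per conversation
            modelClass: ChatMessage::class, // Eloquent model used to read and write messages
            contextWindow: 50000            // assumed token budget kept in memory
        );
    }
}
```

In a real application the thread ID would typically come from the current user or session instead of a fixed string, so that each conversation keeps its own history.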

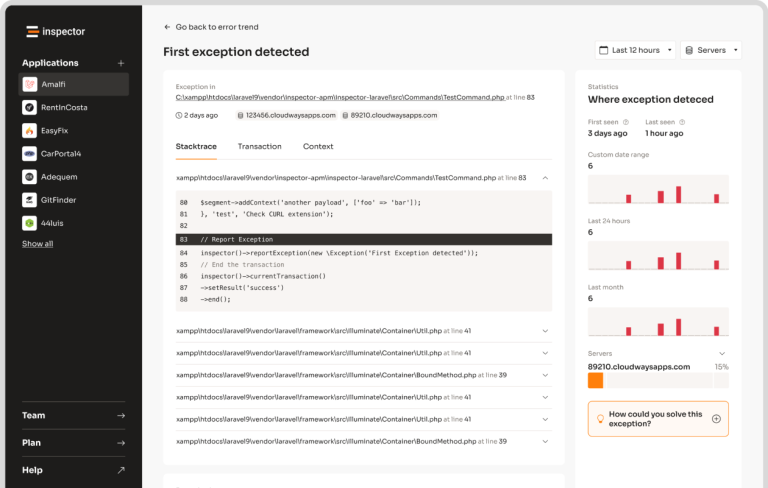

Monitoring & Debugging

When you integrate AI Agents into your application, you're no longer working only with functions and deterministic code: you also program your agent by influencing probability distributions. The same input does not guarantee the same output. That means reproducibility, versioning, and debugging become real problems.

Many of the Agents you build with Neuron will contain multiple steps involving LLM calls, tool usage, access to external memory, and so on. As these applications get more complex, it becomes crucial to be able to inspect exactly what your agent is doing and why.

Why is the model making certain decisions? What data is the model reacting to? Prompting is not programming in the usual sense: there are no static types, small changes can break the output, long prompts add latency, and no two models behave exactly the same with the same prompt.

The best way to keep your AI application under control is with Inspector. After you sign up, make sure to set the INSPECTOR_INGESTION_KEY variable in the application environment file to start monitoring your agents:

INSPECTOR_INGESTION_KEY=fwe45gtxxxxxxxxxxxxxxxxxxxxxxxxxxxx

After configuring the environment variable, you will see the agent execution timeline in your Inspector dashboard.

Learn more about Monitoring in the documentation.