outl1ne / nova-openai

OpenAI SDK for a Laravel application that also stores OpenAI communication and presents it in a Laravel Nova admin panel.

Installs: 4 927

Dependents: 0

Suggesters: 0

Security: 0

Stars: 6

Watchers: 1

Forks: 0

Open Issues: 1

pkg:composer/outl1ne/nova-openai

Requires

- php: ^8.1

- guzzlehttp/guzzle: ^7.8

- laravel/framework: ^11.0|^12.0

- laravel/nova: ^5.0

- outl1ne/nova-translations-loader: ^5.0

Requires (Dev)

- nova-kit/nova-devtool: ^1.8

- phpunit/phpunit: ^11.1

Versions

- dev-main

- 0.18.0

- 0.17.7

- 0.17.6

- 0.17.5

- 0.17.4

- 0.17.3

- 0.17.2

- 0.17.1

- 0.17.0

- 0.16.0

- 0.15.0

- 0.14.1

- 0.14

- 0.13.1

- 0.13.0

- 0.12.1

- 0.12.0

- 0.11.1

- 0.11.0

- 0.10.16

- 0.10.15

- 0.10.14

- 0.10.13

- 0.10.12

- 0.10.11

- 0.10.10

- 0.10.9

- 0.10.8

- 0.10.7

- 0.10.6

- 0.10.5

- 0.10.4

- 0.10.3

- 0.10.2

- 0.10.1

- 0.10.0

- 0.9.2

- 0.9.1

- 0.9.0

- 0.8.0

- 0.7.2

- 0.7.1

- 0.7.0

- 0.6.3

- 0.6.2

- 0.6.1

- 0.6.0

- 0.5.4

- 0.5.3

- 0.5.2

- 0.5.1

- 0.5.0

- 0.4.6

- 0.4.5

- 0.4.4

- 0.4.3

- 0.4.2

- 0.4.1

- 0.4.0

- 0.3.3

- 0.3.2

- 0.3.1

- 0.3.0

- 0.2.2

- 0.2.1

- 0.2.0

- 0.1.0

- dev-nova/4

- dev-feature/add-responses-api

- dev-nova-5

- dev-at-branch-2

- dev-branch

- dev-feature/ability-to-hide-costs

- dev-guzzle-client-exception-logging

- dev-Virtual-branch

- dev-assistants-v2

- dev-update-streamhandler-chunks-filter-join

- dev-update-openai-pricing-models

- dev-wip-streaming-support

- dev-fix/nova-cache-badge-missing

- dev-add-gpt-4-turbo-2024-04-09-model

- dev-add-request-timeout-configuration

- dev-update-response-format-handling

- dev-update-embedding-storage-model-branch

- dev-update-text-embedding-version

- dev-update-embedding-pricing-models

- dev-add-storing-callback-to-capability

- dev-attaching-listing-deleting-files

- dev-update-openai-table-columns

- dev-fix-assistant-name-escaping

- dev-add-threads-assistant-message-response

- dev-add-files-capability-changes

- dev-model-pricing-update-turbo-gpt-4

- dev-calculate-embedding-pricing-models

- dev-update-openai-sdk-structure

- dev-update-pricing-path-logic

- dev-update-pricing-path-logic-branch

- dev-change-cost-calculation-data-type

- dev-create-modify-delete-assistant-branch

- dev-should-store

- dev-update-run-response-usage-parsing

- dev-Add-threads-capability-to-openai

- dev-feature/add-factory-and-request-data

- dev-Update-rate-limit-class

- dev-fix/headers-error

- dev-Refactor-openai-capabilities

- dev-update-readme

- dev-feature/update-readme

- dev-pricing

This package is auto-updated.

Last update: 2026-02-08 11:41:29 UTC

README

This package is currently unstable, so expect breaking changes. Our main objective at the moment is to make it easy to include as a dependency in our own client projects, which lets us mature it and publish a v1 release once it meets our needs in practice. For the same reason, we have not implemented every OpenAI endpoint yet; we add them one by one as they are actually needed.

If you need a feature implemented, or want its priority bumped in our backlog, feel free to get in touch by email at info@outl1ne.com.

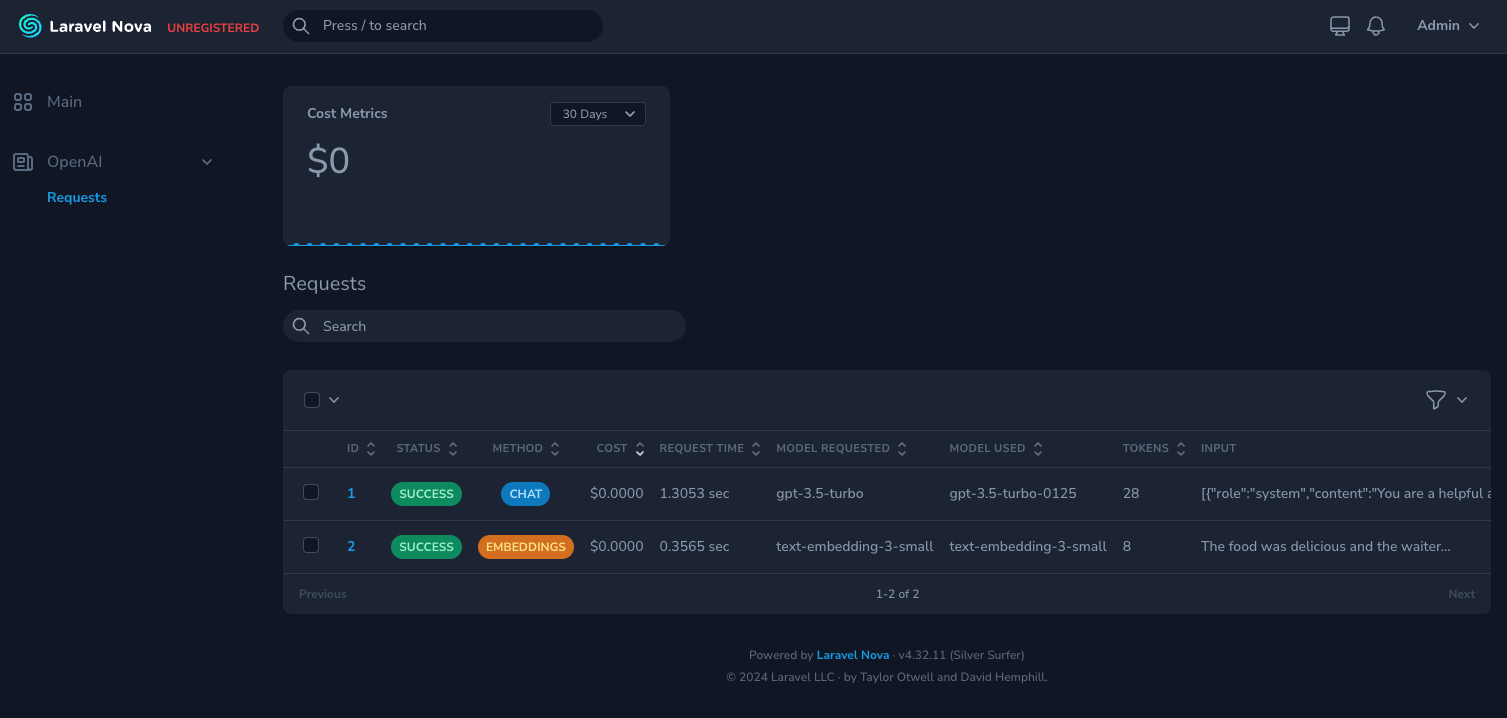

Screenshots

Requirements

- php: >=8.1

- laravel/nova: ^4.0

Installation

    # Install nova-openai
    composer require outl1ne/nova-openai

    # Run migrations
    php artisan migrate

    # Publish config file (optional)
    php artisan vendor:publish --tag=nova-openai-config

Register the tool with Nova in the tools() method of the NovaServiceProvider:

    // in app/Providers/NovaServiceProvider.php

    public function tools()
    {
        return [
            \Outl1ne\NovaOpenAI\NovaOpenAI::make(),
        ];
    }

Usage

Assistants

    $assistant = OpenAI::assistants()->create(
        'gpt-3.5-turbo',
        'Allan\'s assistant',
        'For testing purposes of nova-openai package.',
        'You are a kindergarten teacher. When asked a question, answer briefly and in a way a young child could understand.'
    );
    $assistantModified = OpenAI::assistants()->modify($assistant->id, null, 'Allan\'s assistant!');
    $deletedAssistant = OpenAI::assistants()->delete($assistant->id);
    // dd($assistant->response->json(), $assistantModified->response->json(), $deletedAssistant->response->json());

Attaching, listing and deleting files.

    $assistant = OpenAI::assistants()->create(
        'gpt-3.5-turbo',
        'Allan\'s assistant',
        'For testing purposes of nova-openai package.',
        'You are a kindergarten teacher. When asked a question, answer briefly and in a way a young child could understand.',
        [
            [
                'type' => 'retrieval',
            ],
        ],
    );
    $file = OpenAI::files()->upload(
        file_get_contents('files/file.txt'),
        'file.txt',
        'assistants',
    );
    $assistantFile = OpenAI::assistants()->files()->create($assistant->id, $file->id);
    $assistantFiles = OpenAI::assistants()->files()->list($assistant->id);
    $deletedAssistantFile = OpenAI::assistants()->files()->delete($assistant->id, $file->id);

    // Cleanup
    $deletedAssistant = OpenAI::assistants()->delete($assistant->id);
    $deletedFile = OpenAI::files()->delete($file->id);
    // dd(
    //     $assistantFile->response->json(),
    //     $assistantFiles->response->json(),
    //     $deletedAssistantFile->response->json(),
    // );

Chat

    $response = OpenAI::chat()->create(
        model: 'gpt-3.5-turbo',
        messages: Messages::make()->system('You are a helpful assistant.')->user('Hello!'),
    )->json();

Enable JSON response formatting:

    $response = OpenAI::chat()->create(
        model: 'gpt-3.5-turbo',
        messages: Messages::make()->system('You are a helpful assistant.')->user('Suggest me tasty fruits as JSON array of fruits.'),
        responseFormat: ResponseFormat::make()->json(),
    )->json();

JSON Structured Outputs example:

    $response = OpenAI::chat()->create(
        model: 'gpt-4o-mini',
        messages: Messages::make()->system('You are a helpful assistant.')->user('Suggest me 10 tasty fruits.'),
        responseFormat: ResponseFormat::make()->jsonSchema(
            JsonObject::make()
                ->property('fruits', JsonArray::make()->items(JsonString::make()))
                ->property('number_of_fruits_in_response', JsonInteger::make())
                ->property('number_of_fruits_in_response_divided_by_three', JsonNumber::make())
                ->property('is_number_of_fruits_in_response_even', JsonBoolean::make())
                ->property('fruit_most_occurring_color', JsonEnum::make()->enums(['red', 'green', 'blue']))
                ->property(
                    'random_integer_or_string_max_one_character',
                    JsonAnyOf::make()
                        ->schema(JsonInteger::make())
                        ->schema(JsonString::make())
                ),
        ),
    )->json();

With raw JSON schema:

    $response = OpenAI::chat()->create(
        model: 'gpt-4o-mini',
        messages: Messages::make()->system('You are a helpful assistant.')->user('Suggest me tasty fruits.'),
        responseFormat: ResponseFormat::make()->jsonSchema([
            'name' => 'response',
            'strict' => true,
            'schema' => [
                'type' => 'object',
                'properties' => [
                    'fruits' => [
                        'type' => 'array',
                        'items' => [
                            'type' => 'string',
                        ],
                    ],
                ],
                'additionalProperties' => false,
                'required' => ['fruits'],
            ],
        ]),
    )->json();

Streaming

    $response = OpenAI::chat()->stream(function (string $newChunk, string $message) {
        echo $newChunk;
    })->create(
        model: 'gpt-3.5-turbo',
        messages: Messages::make()->system('You are a helpful assistant.')->user('Hello!'),
    );

Embeddings

    $response = OpenAI::embeddings()->create(
        'text-embedding-3-small',
        'The food was delicious and the waiter...'
    );
    // dd($response->embedding);

If your application already stores the embedding vectors elsewhere, you may want to stop this package from storing them as well. To do so, pass a callback to the storing() method, for example storing(fn ($model) => $model), and clear the model's output inside the callback before returning it.

    $response = OpenAI::embeddings()->storing(function ($model) {
        $model->output = null;
        return $model;
    })->create(
        'text-embedding-3-small',
        'The food was delicious and the waiter...'
    );

Files

Uploading a file, retrieving it, and deleting it afterwards.

    $file = OpenAI::files()->upload(
        file_get_contents('files/file.txt'),
        'file.txt',
        'assistants',
    );
    $files = OpenAI::files()->list();
    $file2 = OpenAI::files()->retrieve($file->id);
    $deletedFile = OpenAI::files()->delete($file->id);
    // dd($file->response->json(), $file2->response->json(), $deletedFile->response->json());

Retrieving a file's content.

    $fileContent = OpenAI::files()->retrieveContent($file->id);

Vector Stores

    $filePath = __DIR__ . '/../test.txt';
    $file = OpenAI::files()->upload(
        file_get_contents($filePath),
        basename($filePath),
        'assistants',
    );
    $vectorStore = OpenAI::vectorStores()->create([$file->id]);
    $vectorStores = OpenAI::vectorStores()->list();
    $vectorStoreRetrieved = OpenAI::vectorStores()->retrieve($vectorStore->id);
    $vectorStoreModified = OpenAI::vectorStores()->modify($vectorStore->id, 'Modified vector store');
    $vectorStoreDeleted = OpenAI::vectorStores()->delete($vectorStore->id);

Threads

    $assistant = OpenAI::assistants()->create(
        'gpt-3.5-turbo',
        'Allan',
        'For testing purposes of nova-openai package.',
        'You are a kindergarten teacher. When asked a question, answer briefly and in a way a young child could understand.'
    );
    $thread = OpenAI::threads()
        ->create(Messages::make()->user('What is your purpose in one short sentence?'));
    $message = OpenAI::threads()->messages()
        ->create($thread->id, ThreadMessage::user('How does AI work? Explain it in simple terms in one sentence.'));
    $response = OpenAI::threads()->run()->execute($thread->id, $assistant->id)->wait()->json();

    // cleanup
    $deletedThread = OpenAI::threads()->delete($thread->id);
    $deletedAssistant = OpenAI::assistants()->delete($assistant->id);
    // dd(
    //     $assistant->response->json(),
    //     $thread->response->json(),
    //     $message->response->json(),
    //     $response,
    //     $deletedThread->response->json(),
    //     $deletedAssistant->response->json(),
    // );

Images

    $images = OpenAI::images()->generate(
        prompt: 'Cute Otter',
        model: 'gpt-image-1',
        size: '1024x1024',
    );
    $edited = OpenAI::images()->edit(
        prompt: 'Add glasses to the otter',
        model: 'gpt-image-1',
        size: '1024x1024',
        image: fopen(storage_path("cute_otter.jpg"), "r"),
    );

Testing

You can use the OpenAIRequest factory to create a request for testing purposes.

    $mockOpenAIChat = Mockery::mock(Chat::class);
    $mockOpenAIChat->shouldReceive('create')->andReturn((object) [
        'choices' => [
            [
                'message' => [
                    'content' => 'Mocked response'
                ]
            ]
        ],
        'request' => OpenAIRequest::factory()->create()
    ]);
    OpenAI::shouldReceive('chat')->andReturn($mockOpenAIChat);

Contributing

    composer install
    testbench workbench:build
    testbench serve