neuron-core / tokenizer

High-Performance Tokenizer implementation in PHP

pkg:composer/neuron-core/tokenizer

Requires

- php: ^8.1

Requires (Dev)

- friendsofphp/php-cs-fixer: ^3.75

- phpstan/phpstan: ^2.1

- phpunit/phpunit: ^9.0

- rector/rector: ^2.0

- tomasvotruba/type-coverage: ^2.0

This package is auto-updated.

Last update: 2026-02-21 11:36:12 UTC

README

composer require neuron-core/tokenizer

BPE (Byte-Pair Encoding) Tokenizer

This implementation requires a pre-trained vocabulary file and a merges file. It focuses on performance, using a caching strategy and a priority queue.

$tokenizer = new BPETokenizer();

// Initialize pre-trained tokenizer
$tokenizer->loadFrom(
    __DIR__.'/../dataset/bpe-vocabulary.json',
    __DIR__.'/../dataset/bpe-merges.txt'
);

// Tokenize text
$tokens = $tokenizer->encode('Hello world!');

var_dump($tokens);

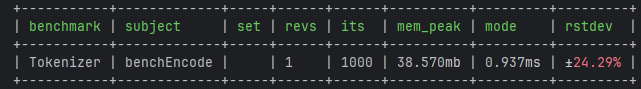
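For readers unfamiliar with how a merges file drives encoding, the following is a minimal sketch of the BPE merge loop with a per-word cache. It is not the package's internal code: the class `TinyBpeSketch`, its method `mergeWord`, and the `"left right"` key format for merge ranks are all hypothetical names chosen for illustration. It also uses a plain linear scan to find the best pair, where an optimized implementation would typically use a priority queue.

```php
<?php

declare(strict_types=1);

/**
 * Illustrative sketch only (hypothetical class, not part of neuron-core/tokenizer).
 * $ranks maps a "left right" symbol pair to its merge rank; lower ranks merge first.
 */
final class TinyBpeSketch
{
    /** @var array<string, list<string>> cache of already merged words */
    private array $cache = [];

    /** @param array<string, int> $ranks */
    public function __construct(private array $ranks)
    {
    }

    /** @return list<string> */
    public function mergeWord(string $word): array
    {
        if ($word === '') {
            return [];
        }

        // Cache hit: repeated words skip the merge loop entirely.
        if (isset($this->cache[$word])) {
            return $this->cache[$word];
        }

        // Start from single bytes and merge the lowest-ranked adjacent pair
        // until no known pair remains.
        $symbols = str_split($word);

        while (count($symbols) > 1) {
            $bestRank = PHP_INT_MAX;
            $bestIndex = -1;

            for ($i = 0, $n = count($symbols) - 1; $i < $n; $i++) {
                $rank = $this->ranks[$symbols[$i] . ' ' . $symbols[$i + 1]] ?? null;
                if ($rank !== null && $rank < $bestRank) {
                    $bestRank = $rank;
                    $bestIndex = $i;
                }
            }

            if ($bestIndex < 0) {
                break; // no mergeable pair left
            }

            $merged = $symbols[$bestIndex] . $symbols[$bestIndex + 1];
            array_splice($symbols, $bestIndex, 2, [$merged]);
        }

        return $this->cache[$word] = $symbols;
    }
}

// Toy merge table, assumed for this example only.
$bpe = new TinyBpeSketch(['l o' => 0, 'lo w' => 1, 'e r' => 2]);
print_r($bpe->mergeWord('lower')); // symbols: "low", "er"
```

With this toy table, `lower` is split into `l o w e r`, then merged step by step into `lo w e r`, `low e r`, and finally `low er`. A second call with the same word returns the cached result without rescanning.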

Benchmarks

The benchmarks are run with PHPBench. They show sub-millisecond performance when encoding the example text below a thousand times:

Contrary to popular belief, Lorem Ipsum is not simply random text. It has roots in a piece of classical Latin literature from 45 BC, making it over 2000 years old. Richard McClintock, a Latin professor at Hampden-Sydney College in Virginia, looked up one of the more obscure Latin words, consectetur, from a Lorem Ipsum passage, and going through the cites of the word in classical literature, discovered the undoubtable source. Lorem Ipsum comes from sections 1.10.32 and 1.10.33 of "de Finibus Bonorum et Malorum" (The Extremes of Good and Evil) by Cicero, written in 45 BC. This book is a treatise on the theory of ethics, very popular during the Renaissance. The first line of Lorem Ipsum, "Lorem ipsum dolor sit amet..", comes from a line in section 1.10.32.

The standard chunk of Lorem Ipsum used since the 1500s is reproduced below for those interested. Sections 1.10.32 and 1.10.33 from "de Finibus Bonorum et Malorum" by Cicero are also reproduced in their exact original form, accompanied by English versions from the 1914 translation by H. Rackham.

With the already trained GPT-2 datasets: